Although we probably will see additional metadata possibilities for ‘marking’ items, See Also already displays an uncanny ability to sense contextual relationships (semantics? meaning?). Peter, you wondered whether giving special weight to titles of documents, or providing other metadata hints, would result in better performance by DEVONthink. There are some things that I organize carefully into groups, so that I – a mere human – can find them myself: contacts, drafts, and that sort of thing. Spotlight simply can’t do that sort of ‘thinking.’ Intelligent features such as See Also mean that DEVONthink will thrive in Tiger. That doesn’t hinder DEVONthink from acting as a very useful research assistant. At the moment, for example, I’ve got over 3,000 items in my “Edit This” imports group. I haven’t really been able to keep my database content well organized in groups. What I really love is that DEVONthink can often suggest similarities or relationships that I hadn’t realized.

It can find similar items regardless of how they are grouped. Clearly, DEVONthink is looking beyond the hierarchical organization of items, which I find very useful indeed. Even large documents that contain some of the terms in the base document may rank low – again, the relevancy rankings really do reflect the fact that DEVONthink is looking at the contents of the database to find semantic relationships. My main database contains a good many thousand items, and over 14,300,000 words.

“See Also” relavancy ratings show the document you are viewing as most similar in context to itself – which is right on. It seems to me that searches in DT are likely to be weighted by the frequency of occurence of the search string in documents.īut as your database grows larger, you will probably find that isn’t the case for “See Also.” This is a feature I use a great deal, and it does often display striking intelligence. I tried to find the answers to these questions (that seem sort of fundamental to me) in the documentation or here in the forums (using terms such as ‘score’, ‘relevan*’, ‘title’) without finding answers. Is there (will there be) some way of giving DT ‘hints’ about relevancy? For example by identifying ‘key’ phrases manually in a document? Shouldn’t classification have this effect? I could understand that weighting headings inside a document might be difficult for text, but what about RTF (where headings are more readily distinguished)? Is there (will there be) attention paid to e.g. When I search for a phrase, does the score reflect simply the frequency of the individual words? Or is there some attention paid to proximity in the frequency calculation? Could there be?

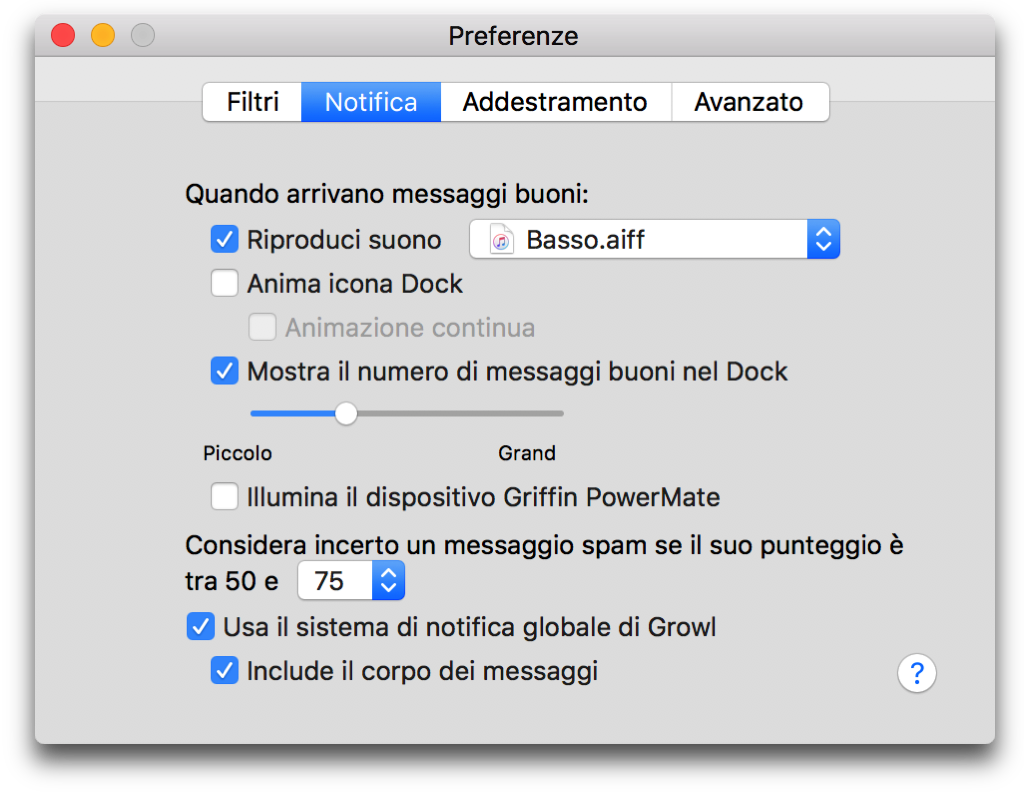

SPAMSIEVE SCORE MANUAL

It seems to be ignoring my manual classification into folders when searching for documents. It seems to me that DT is not taking notice of relevancy signals other than frequency of words in the documents themselves. The same list seems to appear in the Drawer when the ‘see also’ button is pressed. But none of these is ANY of the documents (all replicants) in the folder with a name that contains the words I searched for. I click on that document in the list of documents returned by the search and the drawer to the right of the window fills with a list of documents. But DT has returned – at the bottom of the relevancy list – the replicant in an earlier folder in the hierarchy whose name bears no relation to the content I searched for. The title of the document contains the words I searched for and one of the folders in which it is located (as a replicant) has a name that contains the words in the phrase for which I searched (in other words, I have classified this document to a folder named for its relevant content). Although it is listed near the bottom of the list, it is located in two DT folders (as replicants). I scroll down through the returned documents to the bottom of the list and choose a document I believe is more relevant than DT believes. For example brief documents with the phrase in the title of the document and whose content includes one or two headings using the phrase (RIGHT ON THE NOSE for a relevancy score) are found at the bottom of the list. This seems to be the case since I regularly see the LONGEST document in a subject domain returned at the top of the list, even when it is NOT the most relevant by any semantic measure. Starting with the toolbar search field (say, searching ALL for an Exact phrase of one or more words): how are the documents returned ranked? By simple frequency of occurrence? They range in size from 200 word press releases to long monographs. 
I have a small database of about 2-300 documents dealing with issues in the same domain. I’m new to DT but I’d like to know how this works because I’m finding the ‘relevance’ displays confusing.
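The hypothesis in this thread, that ranking by raw frequency of occurrence pushes the longest document to the top, can be illustrated with a toy scorer. This is a minimal sketch, not DEVONthink’s actual algorithm (which is not described here): the function names and sample documents are hypothetical, and dividing by document length is just one common alternative, shown for comparison.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase and split into alphanumeric words (a simplifying assumption).
    return re.findall(r"[a-z0-9]+", text.lower())


def raw_frequency_score(doc: str, query: str) -> int:
    # Hypothetical scorer: count every occurrence of every query term,
    # so longer documents naturally accumulate more hits.
    counts = Counter(tokenize(doc))
    return sum(counts[term] for term in tokenize(query))


def length_normalized_score(doc: str, query: str) -> float:
    # Hypothetical alternative: divide hits by document length so a short,
    # focused document is not swamped by a long one that mentions the terms in passing.
    words = tokenize(doc)
    if not words:
        return 0.0
    return raw_frequency_score(doc, query) / len(words)


def rank(docs: dict[str, str], query: str, scorer) -> list[str]:
    # Return document names sorted from highest to lowest score.
    return sorted(docs, key=lambda name: scorer(docs[name], query), reverse=True)


# Hypothetical documents: a short press release and a long monograph.
docs = {
    "press_release": "Water policy reform. The water policy reform bill passed today.",
    "monograph": ("History of agriculture. " * 50)
    + ("Water policy is discussed briefly. " * 5),
}
query = "water policy"

print(rank(docs, query, raw_frequency_score))      # → ['monograph', 'press_release']
print(rank(docs, query, length_normalized_score))  # → ['press_release', 'monograph']
```

Under raw counting the monograph wins (10 hits against 4) even though the press release is entirely about the query; normalizing by length (4/10 against 10/175) reverses the order.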
